Our team has been quietly grinding away for months on Buildbox 4, our AI game engine. With the public launch just around the corner, we’ve been tweaking our models and rolling out some cool new features to totally change what AI can do in game development.

In the lead-up to this exciting launch and beyond, we’re not just keeping our heads down; we’re committed to bringing you along on our journey, offering weekly updates, breakthroughs, and insights.

So, join us as we dive deeper into the world of Buildbox AI with this week’s milestones!

Ground Generation Model Training

This week’s achievement is finalizing the first stable version of our AI code generation project. It’s the first time we have trained a ground generation model for Buildbox 4. Previously, we relied on a model hosted on the platform Replicate, which incurred costs. Now we can save money by running our own model in serverless mode. To streamline this process, the team has begun recovering async task results from BEAM (a tool we use for serverless GPU inference).

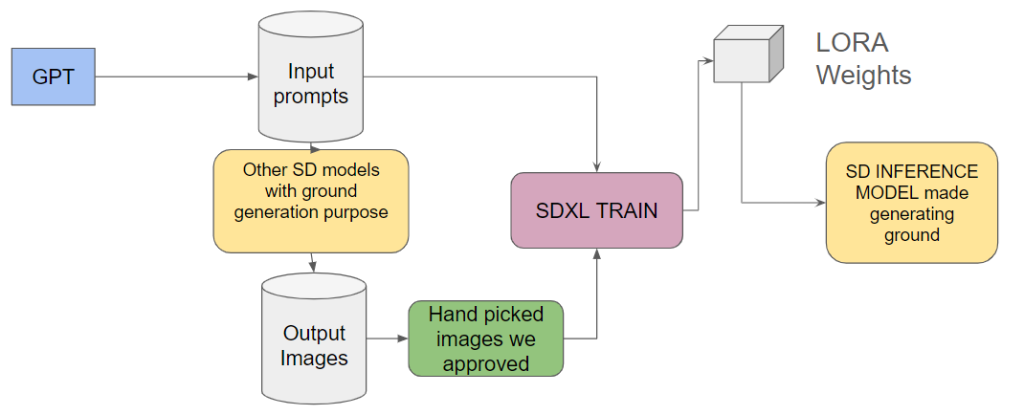

Our team trained the ground generation model with Stable Diffusion (SD), following a “Teacher-Student” approach that learns from examples produced by the current Replicate model.

We started with GPT to generate hundreds of example inputs. Then, we ran those inputs through the model we wanted to replace, generated an image for each one, and used the resulting inputs and outputs to train our own model. This way, our model learns to behave much like the current one at a lower cost, which is what AI engineering calls the “Teacher-Student” approach.
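To make the data-collection step more concrete, here is a minimal sketch of how teacher outputs could be gathered into training pairs. Everything in it is an assumption for illustration: `TrainingPair`, `collect_teacher_examples`, and `fake_teacher` are hypothetical names, and the fake teacher only stands in for a call to the existing hosted model. It is not our actual training pipeline.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class TrainingPair:
    """One (input, output) example recorded from the teacher model."""
    prompt: str
    image: bytes


def collect_teacher_examples(
    prompts: List[str], teacher: Callable[[str], bytes]
) -> List[TrainingPair]:
    """Run every prompt through the teacher and keep the input/output pair."""
    pairs = []
    for prompt in prompts:
        image = teacher(prompt)  # hypothetical call to the existing model
        pairs.append(TrainingPair(prompt=prompt, image=image))
    return pairs


def fake_teacher(prompt: str) -> bytes:
    """Stand-in for the real teacher so the sketch runs without a network call."""
    return f"image-for:{prompt}".encode("utf-8")


if __name__ == "__main__":
    # In practice the prompts would come from a text model; these are made up.
    examples = collect_teacher_examples(["grassy hills", "lava field"], fake_teacher)
    for pair in examples:
        print(pair.prompt, len(pair.image))
```

The resulting list of pairs is what a student model would then be fine-tuned on, so the student learns to map the same inputs to similar outputs.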

It’s also the first time we’re training our own model weights (the learned parameters a model uses to recognize patterns in data), which is a more efficient way of training models. They reduce the need for extensive GPU usage.

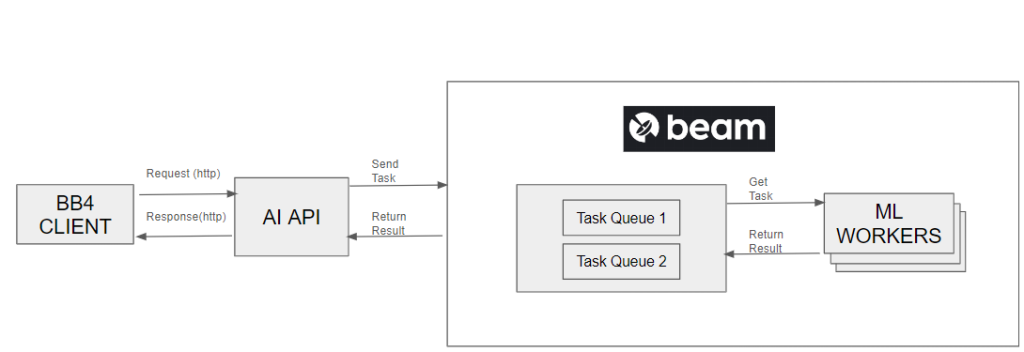

Starting Async Task Recovery From BEAM

BEAM provides task queues to retrieve model inference results that are not instantaneous. This allows us to coordinate multiple users making heavy backend requests and generating multiple responses concurrently.

The Buildbox 4 client makes HTTP requests to the AI API, and BEAM manages the workload inside it with task queues. All we need to do is send a task request and watch our channel for the response. For example, once an image is generated, we can share it with every user who needs it, all in one synchronized group.
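The submit-then-watch pattern described above can be sketched roughly as follows. This is only an illustration using Python's `asyncio`: `InMemoryTaskQueue`, `submit`, `result`, and `wait_for_result` are hypothetical names, and the in-memory queue is a stand-in, not BEAM's actual API.

```python
import asyncio
import uuid
from typing import Dict, List, Optional, Set


class InMemoryTaskQueue:
    """Hypothetical stand-in for a serverless task queue (not BEAM's API)."""

    def __init__(self) -> None:
        self._results: Dict[str, Optional[str]] = {}
        self._tasks: Set[asyncio.Task] = set()  # keep references so tasks are not dropped

    async def _run(self, task_id: str, prompt: str) -> None:
        await asyncio.sleep(0.05)  # simulate slow model inference
        self._results[task_id] = f"ground-image-for:{prompt}"

    def submit(self, prompt: str) -> str:
        """Queue a job and return its id immediately, without waiting."""
        task_id = uuid.uuid4().hex
        self._results[task_id] = None
        task = asyncio.get_running_loop().create_task(self._run(task_id, prompt))
        self._tasks.add(task)
        task.add_done_callback(self._tasks.discard)
        return task_id

    def result(self, task_id: str) -> Optional[str]:
        """Return the finished result, or None while the job is still running."""
        return self._results[task_id]


async def wait_for_result(
    queue: InMemoryTaskQueue,
    task_id: str,
    poll_interval: float = 0.01,
    timeout: float = 2.0,
) -> str:
    """Poll the queue until the task finishes or the timeout expires."""
    loop = asyncio.get_running_loop()
    deadline = loop.time() + timeout
    while loop.time() < deadline:
        result = queue.result(task_id)
        if result is not None:
            return result
        await asyncio.sleep(poll_interval)
    raise TimeoutError(f"task {task_id} did not finish in {timeout}s")


async def main() -> List[str]:
    queue = InMemoryTaskQueue()
    # Two users request ground images at the same time.
    ids = [queue.submit(prompt) for prompt in ("desert", "forest")]
    return await asyncio.gather(*(wait_for_result(queue, i) for i in ids))


if __name__ == "__main__":
    print(asyncio.run(main()))
```

Because each request returns a task id right away, several users can have jobs in flight at once, and each client simply waits for its own result.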

AI Code Generation Progress

This week, we’ve been making some serious headway with our AI code generation project. It can now change the behavior of a single asset by creating a script node with a custom script, and this is just the beginning.

These are the features currently supported in the Alpha version of Buildbox 4:

- It supports basic relations with other assets, like “change color if the player runs into it”.

- It supports editing existing scripts to add more functionality.

- It creates custom attributes that the user can manually change without having to look at the code or the node map.

- It groups nodes with the same functionality and only shows the attributes relevant to the user.

The team also completed support for node groups so that we can show a clean interface of attributes for users.
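As a rough illustration of the node-group idea, the sketch below groups nodes by functionality and surfaces only the attributes marked as user-facing. The `ScriptNode` structure, its `exposed` field, and `group_exposed_attributes` are hypothetical and exist only for this example; they do not describe Buildbox 4's real data model.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class ScriptNode:
    """Hypothetical node: a name, a functional group, and its attributes."""
    name: str
    group: str
    attributes: Dict[str, Any]
    exposed: List[str] = field(default_factory=list)  # attribute names shown to the user


def group_exposed_attributes(nodes: List[ScriptNode]) -> Dict[str, Dict[str, Any]]:
    """Collect only the user-facing attributes, keyed by node group."""
    grouped: Dict[str, Dict[str, Any]] = {}
    for node in nodes:
        visible = {
            key: node.attributes[key]
            for key in node.exposed
            if key in node.attributes
        }
        grouped.setdefault(node.group, {}).update(visible)
    return grouped


if __name__ == "__main__":
    nodes = [
        ScriptNode("on_collide", "color_change",
                   {"target": "player", "internal_id": 7}, exposed=["target"]),
        ScriptNode("set_color", "color_change",
                   {"color": "#ff0000", "cache": True}, exposed=["color"]),
    ]
    print(group_exposed_attributes(nodes))
```

Here the internal fields (`internal_id`, `cache`) stay hidden, while `target` and `color` appear together under a single group, which is the kind of clean attribute panel described above.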

As we continue to push the boundaries of what’s possible with AI, we’re excited about the future. The strides we’ve made in AI code generation this week are just the tip of the iceberg. We can’t wait to see where this journey takes us. Stay tuned!